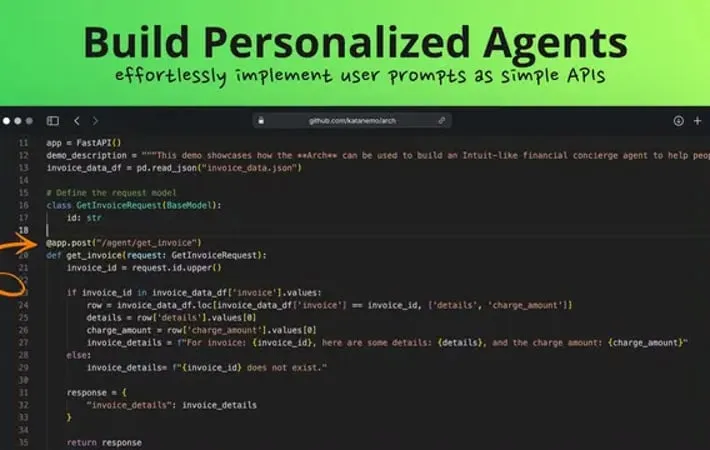

Arch

About Arch

Arch is an open-source intelligent prompt gateway engineered with purpose-built LLMs to handle critical tasks related to prompt processing and API integration. Built by the core contributors of Envoy proxy, it treats prompts as nuanced user requests that require the same capabilities as traditional HTTP requests. The platform is designed to protect, observe, and personalize LLM applications like agents, assistants, and co-pilots while integrating them with backend APIs.

Key Features

Arch is an intelligent Layer 7 gateway designed for handling AI/LLM applications, built on Envoy Proxy. It provides comprehensive prompt management capabilities including jailbreak detection, API integration, LLM routing, and observability features. The platform uses purpose-built LLMs to handle critical tasks like function calling, parameter extraction, and secure prompt processing, while offering standards-based monitoring and traffic management features.

- Built-in Function Calling: Engineered with purpose-built LLMs to handle fast, cost-effective API calling and parameter extraction from prompts for building agentic and RAG applications.
- Prompt Security: Centralizes prompt guardrails and provides automatic jailbreak attempt detection without requiring custom code implementation.
- Advanced Traffic Management: Manages LLM calls with smart retries, automatic failover, and resilient upstream connections for ensuring continuous availability.
- Enterprise-grade Observability: Implements W3C Trace Context standard for complete request tracing and provides comprehensive metrics for monitoring latency, token usage, and error rates.
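To make the W3C Trace Context mention more concrete, here is a minimal Python sketch of the `traceparent` header that the standard defines (version, trace ID, parent span ID, and flags, all in lowercase hex). The helper names `make_traceparent` and `parse_traceparent` are hypothetical and are not part of Arch; how Arch itself reads or propagates this header is not described here, so treat this only as an illustration of the header format.

```python
import re
import secrets

# Layout from the W3C Trace Context spec:
# "00" version, 32-hex trace-id, 16-hex parent-id, 2-hex trace-flags.
TRACEPARENT_RE = re.compile(r"^00-([0-9a-f]{32})-([0-9a-f]{16})-([0-9a-f]{2})$")


def make_traceparent(sampled: bool = True) -> str:
    """Build a new traceparent header value with random IDs (hypothetical helper)."""
    trace_id = secrets.token_hex(16)   # 16 random bytes -> 32 hex characters
    parent_id = secrets.token_hex(8)   # 8 random bytes -> 16 hex characters
    flags = "01" if sampled else "00"  # lowest bit is the "sampled" flag
    return f"00-{trace_id}-{parent_id}-{flags}"


def parse_traceparent(header: str) -> dict:
    """Split a version-00 traceparent header into its fields, or raise ValueError."""
    match = TRACEPARENT_RE.match(header)
    if match is None:
        raise ValueError(f"not a version-00 traceparent header: {header!r}")
    trace_id, parent_id, flags = match.groups()
    # The spec treats all-zero trace and parent IDs as invalid.
    if set(trace_id) == {"0"} or set(parent_id) == {"0"}:
        raise ValueError("all-zero trace-id or parent-id is invalid")
    return {
        "trace_id": trace_id,
        "parent_id": parent_id,
        "sampled": bool(int(flags, 16) & 0x01),
    }
```

An application could attach the value from `make_traceparent()` as a `traceparent` request header so that spans from different services can be joined into one trace, assuming every hop forwards the header.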

Use Cases

- AI-Powered Weather Forecasting: Integration with weather services to provide intelligent weather forecasting through natural language processing.
- Insurance Agent Automation: Building automated insurance agents that can handle customer queries and process insurance-related tasks.
- Network Management Assistant: Creating networking co-pilots that help operators understand traffic flow and manage network operations through natural language interactions.
- Enterprise API Integration: Seamlessly connecting AI capabilities with existing enterprise APIs while maintaining security and observability.

Pros

- Built on proven Envoy Proxy technology for reliable performance.
- Comprehensive security features with built-in jailbreak detection.
- Standards-based observability making it enterprise-ready.

Cons

- Potential search visibility issues due to name confusion with Arch Linux.
- Requires Docker and specific technical prerequisites for setup.
- Limited documentation and community resources as a newer project.

How to Use

1. Install Prerequisites: Ensure you have Docker (v24), Docker Compose (v2.29), Python (v3.10), and Poetry (v1.8.3) installed on your system. Poetry is needed only for local development.
2. Create Python Virtual Environment: Create and activate a new Python virtual environment using: python -m venv venv && source venv/bin/activate (or venv\Scripts\activate on Windows).
3. Install Arch CLI: Install the Arch gateway CLI tool using pip: pip install archgw
4. Create Configuration File: Create a configuration file (e.g., arch_config.yaml) defining your LLM providers, prompt targets, endpoints, and other settings like system prompts and parameters.
5. Configure LLM Providers: In the config file, set up your LLM providers (e.g., OpenAI) with appropriate access keys and model settings.
6. Define Prompt Targets: Configure prompt targets in the config file, specifying endpoints, parameters, and descriptions for each target function.
7. Set Up Endpoints: Define your application endpoints in the config file, including connection settings and timeouts.
8. Initialize Client: Create an OpenAI client instance pointing to the Arch gateway (e.g., base_url='http://127.0.0.1:12000/v1') in your application code.
9. Make API Calls: Use the configured client to make API calls through Arch, which will handle routing, security, and observability.
10. Monitor Performance: Use Arch's built-in observability features to monitor metrics, traces, and logs for your LLM interactions.
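The client and API-call steps above can be sketched with only the Python standard library. This is a rough illustration, not the official client usage: it builds the same kind of HTTP request an OpenAI-compatible client would send to the base URL given above, by appending the OpenAI-style `/chat/completions` path. The function names, the model name `gpt-4o`, and the placeholder API key are assumptions made for this example; the gateway is expected to use the provider keys from its own configuration file.

```python
import json
import urllib.request

# Base URL taken from the steps above; adjust it if your gateway listens elsewhere.
ARCH_BASE_URL = "http://127.0.0.1:12000/v1"


def build_chat_request(
    prompt: str,
    model: str = "gpt-4o",          # hypothetical model name, set to match your config
    base_url: str = ARCH_BASE_URL,
    api_key: str = "placeholder",   # assumption: real provider keys live in the gateway config
) -> urllib.request.Request:
    """Build an OpenAI-style chat completion request addressed to the gateway."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        url=f"{base_url.rstrip('/')}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


def send(request: urllib.request.Request, timeout: float = 30.0) -> dict:
    """Send the request and decode the JSON reply (needs a running gateway)."""
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.load(response)
```

With the gateway running, `send(build_chat_request("What is the weather in Seattle?"))` would return the decoded JSON response. In application code, the OpenAI client configured with the same base_url plays the same role and is usually more convenient.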

Official Website

Visit https://github.com/katanemo/arch?ref=aipure to learn more.